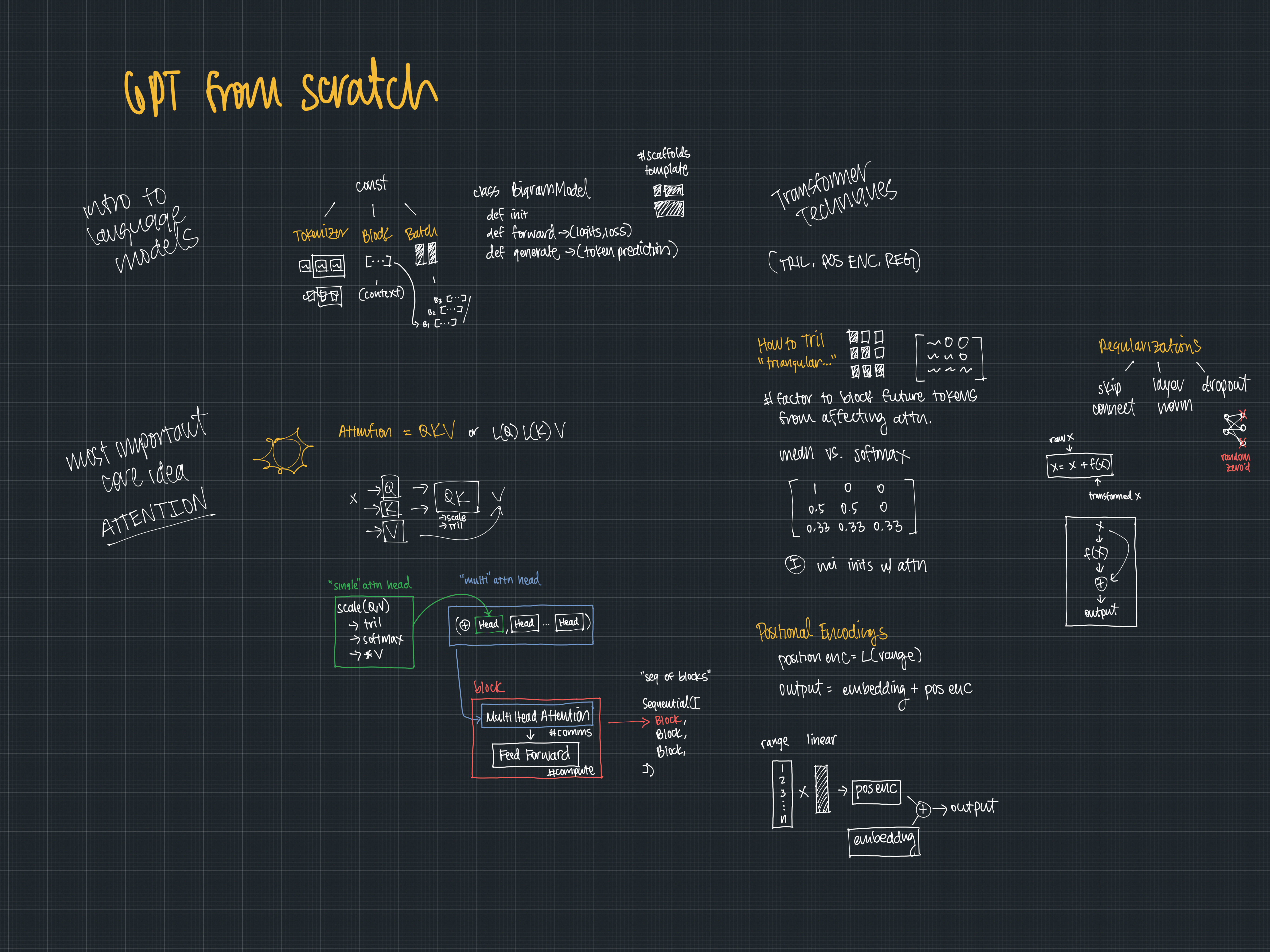

Andrej Karpathy has an incredible video on building GPT from scratch.

Here are cartoon notes covering the contents:

- tokenizer

- characters, sequences of chars become “tokens”. Many strategies for this. In this video we actually just use each character as a token.

- block size

- how many tokens are used for prediction?

- batch size

- how many blocks run in parallel to max speed/GPU usage

- BigramModel as model template

- every llm needs…

def forward -> (logits, loss)def generate

- tril

- “triangular… something”

- token prediction should only look at past tokens

- tril is a matrix operation to remove future tokens

- positional encoding

- add parameterized info about positions.

- Attention (“the most important part”)

- single attention:

- QKV

- more like: L(Q)L(K)V where L is Linear

- more like: softmax(tril(scale(L(Q),L(V))))V

- multi head attention

- Concat([Head 1, Head 2, … Head n])

- attention block

- Multi head attention -> Feed Forward

- sequence of blocks

- “Sequential([Block, Block, Block…])

- single attention:

- regularization techniques

- skip connections

- layer norm

- dropout